AI Agent Development Tools 2026: Complete Stack Comparison (LangChain vs AutoGPT vs CrewAI)

📧 Subscribe to JavaScript Insights

Get the latest JavaScript tutorials, career tips, and industry insights delivered to your inbox weekly.

Choosing the wrong AI agent framework costs you weeks of refactoring and thousands of dollars in wasted development time. I learned this the hard way after building my first production agent with a tool that couldn't scale beyond the initial demo. Three months later, I rewrote everything from scratch using a different framework.

The AI agent development landscape has exploded over the past eighteen months. What started with a handful of experimental libraries has evolved into a complex ecosystem where making the right choice requires understanding subtle trade-offs between flexibility, performance, and ease of use. This isn't about finding the "best" tool because no such thing exists. It's about matching your specific requirements to the framework that solves your particular problems.

I've built production AI agents using LangChain, AutoGPT, CrewAI, and several smaller frameworks. I've deployed systems handling thousands of daily interactions for paying clients. This comparison comes from real-world experience, not documentation reading or theoretical analysis. Every framework mentioned here has strengths and serious limitations that only become apparent when you ship actual products.

Understanding What AI Agent Frameworks Actually Do

Before comparing specific tools, you need to understand what these frameworks provide and why you might need them at all. At the most basic level, an AI agent framework handles the complexity of connecting language models to external tools, managing conversation memory, and orchestrating multi-step reasoning processes.

Think of an AI agent as a program that can plan, execute actions, and adapt based on results. When a user asks your customer support agent to check order status, the agent needs to understand the question, query your database, interpret the results, and format a helpful response. A framework provides the scaffolding for this workflow so you're not reinventing basic patterns.

The core components that every serious framework must handle include prompt management, tool integration, memory systems, and error handling. Prompt management means structuring the instructions and context you send to the language model. Tool integration provides the mechanism for agents to call functions, APIs, or external services. Memory systems store conversation history and relevant context. Error handling ensures your agent degrades gracefully when things go wrong.

Without a framework, you'd write hundreds of lines of boilerplate code managing API calls, parsing responses, handling rate limits, and orchestrating multi-step workflows. Frameworks abstract these patterns into reusable components so you focus on business logic rather than plumbing. The question isn't whether to use a framework but which one matches your needs.

Different frameworks make different architectural decisions that profoundly impact how you build agents. Some optimize for flexibility, giving you fine-grained control at the cost of complexity. Others prioritize simplicity, offering opinionated patterns that work well for common cases but struggle with custom requirements. Understanding these trade-offs helps you choose wisely.

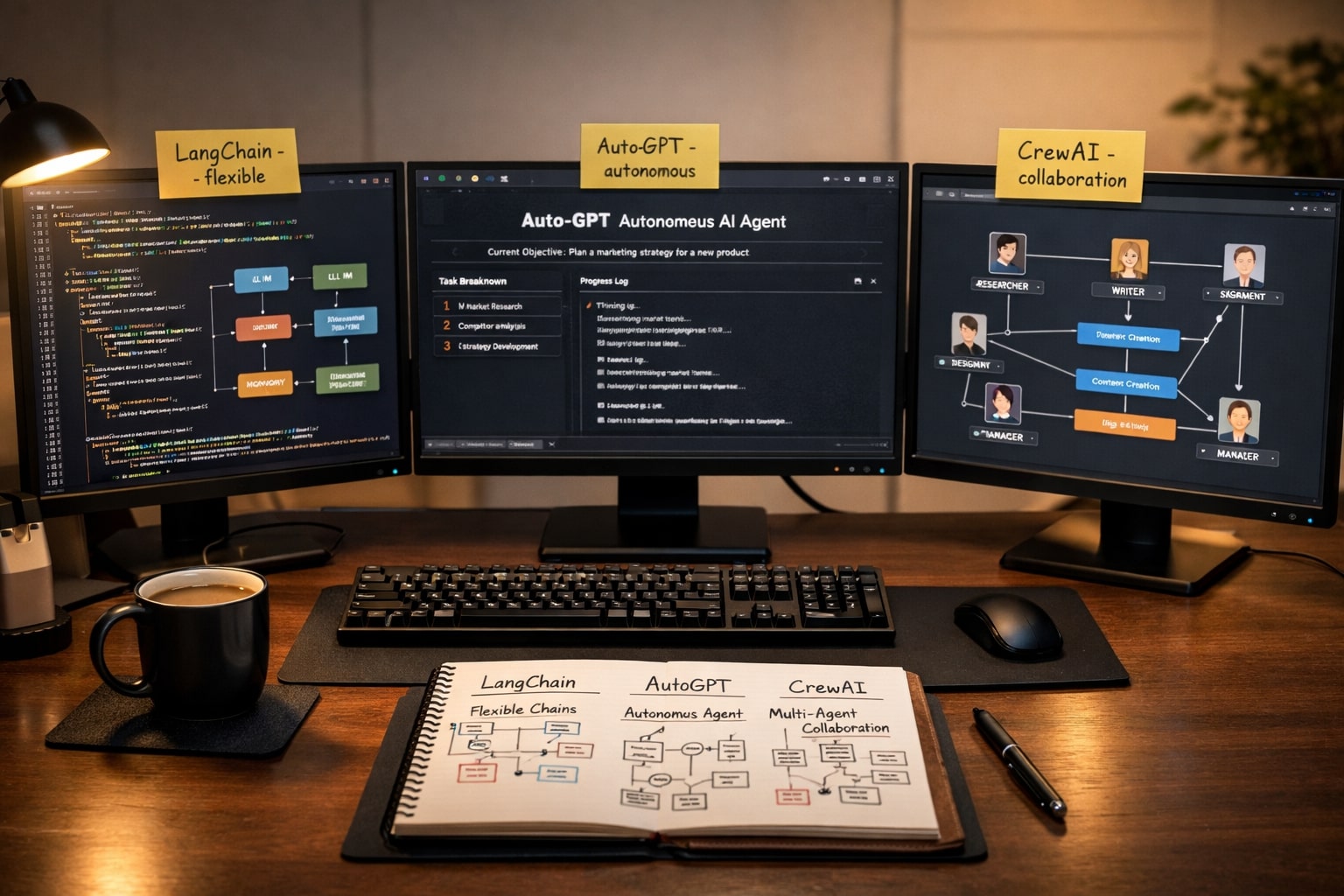

LangChain: The Swiss Army Knife of AI Development

LangChain emerged as the first comprehensive framework for building language model applications and quickly became the de facto standard. The project has grown into a massive ecosystem with support for virtually every language model, vector database, and integration you might need.

The core philosophy of LangChain centers on composability. Everything is a chain of components that you connect together. Want to retrieve documents from a vector store, stuff them into a prompt, send them to a language model, and parse the output? LangChain provides components for each step that you wire together. This modularity offers tremendous flexibility when you need to customize behavior.

LangChain's strength lies in its breadth of integrations. Need to connect to Pinecone, Weaviate, or Chroma for vector search? LangChain supports all of them. Want to use OpenAI, Anthropic, or local models? All available. Planning to integrate with Google Drive, Slack, or GitHub? Pre-built tools exist for common platforms. This ecosystem means you spend less time writing integration code and more time building features.

The framework provides several key abstractions that simplify agent development. The LLM class handles communication with different language models through a consistent interface. The PromptTemplate system manages complex prompts with variables and formatting. The VectorStore abstraction lets you swap between different similarity search backends without changing application code. The Agent class orchestrates the reasoning loop where models decide what actions to take.

However, LangChain's flexibility comes with significant complexity. The learning curve is steep because the framework tries to solve every possible use case. Documentation, while extensive, can feel overwhelming when you're starting out. You'll find yourself reading through multiple abstraction layers to understand how components interact.

Performance becomes an issue when building production systems with LangChain. The abstraction layers add overhead that matters at scale. A simple agent call might traverse through five or six different classes before actually making an API request. This overhead translates to measurable latency that impacts user experience.

Version stability has been a persistent challenge with LangChain. The framework evolves rapidly, which means breaking changes happen frequently. Code that works perfectly with version 0.1.15 might break when you upgrade to 0.1.20. If you're building production systems, pin your versions carefully and budget time for upgrade testing.

The agent implementations in LangChain work well for demos but often require customization for production use. The default ReAct agent, for example, makes reasonable decisions but struggles with complex multi-step planning. You'll find yourself either accepting limitations or diving deep into the internals to customize behavior.

Despite these challenges, LangChain remains the best choice for projects requiring extensive integrations or experimentation with different approaches. When you're not sure exactly what you need or expect requirements to evolve significantly, LangChain's flexibility justifies the complexity cost. The framework really shines when you need to quickly prototype different architectures or swap components to test alternatives.

Real-world usage shows LangChain working best for research projects, proof-of-concepts, and applications where you need access to the entire ecosystem of AI tools. If you're building AI agents as a side income, LangChain gives you the flexibility to handle diverse client requirements without switching frameworks.

AutoGPT: The Autonomous Agent Pioneer

AutoGPT represents a different philosophy entirely. Instead of providing components you assemble, it offers a complete autonomous agent system that can pursue goals with minimal human intervention. The project gained massive attention in 2023 for demonstrating agents that could break down complex tasks and work toward objectives independently.

The core concept behind AutoGPT is fascinating. You give the agent a goal like "research competitors in the coffee subscription market and create a comparison report." The agent then breaks this goal into sub-tasks, executes them, evaluates progress, and continues until it believes the goal is accomplished. This autonomous behavior differs fundamentally from traditional agent frameworks where you define the workflow.

AutoGPT maintains long-term memory across interactions, allowing it to build context over extended periods. The agent remembers previous actions, their outcomes, and lessons learned. This memory system enables more sophisticated behavior than single-turn interactions. An AutoGPT agent researching market trends might remember that certain sources provided poor information and avoid them in future searches.

The plugin system allows extending AutoGPT with custom capabilities. Want your agent to access proprietary APIs or internal databases? Write a plugin that exposes these functions to the agent. The plugin architecture makes AutoGPT surprisingly extensible despite being more opinionated than frameworks like LangChain.

However, the autonomous nature that makes AutoGPT interesting also creates significant challenges. Agents can go off on tangents, burning through API credits while pursuing irrelevant sub-goals. I once set an AutoGPT agent to research pricing strategies and watched it spend forty minutes exploring the history of currency systems before I intervened. Autonomous doesn't always mean efficient.

Cost management becomes critical with AutoGPT because agents make many sequential API calls as they reason through problems. A single task might require twenty or thirty language model interactions, each costing money. Without careful guardrails, development and testing can become expensive quickly. Production deployments need strict budget limits and timeouts to prevent runaway spending.

The unpredictability of autonomous agents makes them unsuitable for many business applications. When a customer asks your support agent about order status, you need a predictable response time and reliable behavior. AutoGPT's exploratory approach works poorly for scenarios requiring consistency and speed. The agent might find creative solutions, but users waiting three minutes for simple answers will be frustrated.

Setting appropriate goals requires skill because AutoGPT agents interpret instructions literally. Vague goals lead to unexpected behavior. Overly specific goals defeat the purpose of autonomy. Finding the right level of direction takes practice and testing. You'll spend time refining how you phrase objectives to get desired behavior.

AutoGPT works best for research tasks, content generation, and scenarios where exploration matters more than efficiency. If you need an agent to investigate a topic thoroughly, synthesize information from multiple sources, and produce comprehensive reports, AutoGPT's autonomous nature becomes an advantage. The agent will dig deeper than scripted workflows typically reach.

The project has evolved significantly since its viral moment. The codebase has matured, adding better error handling, improved memory systems, and more sophisticated planning algorithms. However, the fundamental challenges of autonomous agents remain. You're trading control and predictability for creativity and thoroughness.

For production use, AutoGPT requires extensive customization and guardrails. You'll implement timeout mechanisms, cost limits, and quality checks on agent output. The autonomous agent becomes semi-autonomous with human oversight at critical decision points. This hybrid approach captures some benefits while managing risks.

Real-world applications of AutoGPT tend toward internal tools rather than customer-facing products. A research team might use AutoGPT to investigate market trends. A content team could leverage it for comprehensive topic research. These use cases tolerate longer execution times and occasional tangents in exchange for thorough results. Understanding how AI impacts developer productivity helps you decide when autonomous agents make sense versus traditional approaches.

CrewAI: The Multi-Agent Collaboration Framework

CrewAI emerged as developers recognized that complex tasks often require multiple specialized agents working together rather than a single general-purpose agent. The framework structures AI agents as crew members with specific roles collaborating toward shared objectives.

The core metaphor of CrewAI revolves around crews, agents, and tasks. A crew is a team of agents. Each agent has a role, backstory, and specific capabilities. Tasks get assigned to agents based on their specialization. The framework handles communication between agents and orchestrates their collaboration. This structure mirrors how human teams operate, making it intuitive for modeling real-world workflows.

Imagine building a content creation system. One agent researches topics and gathers information. Another agent writes first drafts based on research. A third agent edits for clarity and style. A fourth agent optimizes for SEO. Each agent specializes in one aspect of the workflow, and CrewAI coordinates their sequential or parallel execution.

The role-based approach solves a significant problem with general-purpose agents. When you ask a single agent to research, write, edit, and optimize, it often does none of these tasks particularly well. Specialized agents with focused prompts and tools perform their specific functions more reliably. This separation of concerns improves both quality and reliability.

CrewAI provides clear patterns for defining agent roles and relationships. You specify each agent's expertise, the tools they can access, and how they should approach their work. The framework manages passing information between agents, handling failures, and ensuring tasks complete in the correct order. This orchestration happens automatically once you define the crew structure.

The collaboration mechanisms in CrewAI support different workflows. Sequential execution works when tasks must happen in order, like research followed by writing followed by editing. Parallel execution handles tasks that can run simultaneously, like generating multiple variations of content. Hierarchical structures allow manager agents to delegate to specialist agents. This flexibility handles various real-world scenarios.

However, CrewAI introduces complexity through multi-agent coordination overhead. Each agent requires its own prompt engineering, tool configuration, and testing. Debugging becomes more challenging when issues could stem from any agent in the chain or from inter-agent communication. You're essentially building and maintaining multiple agents instead of one.

The sequential nature of many CrewAI workflows impacts latency. If your content creation crew has four agents running sequentially, the total execution time equals the sum of all individual agent executions. This cumulative delay makes CrewAI unsuitable for real-time interactions where users expect immediate responses. The framework works better for batch processes or background tasks.

Cost scales with the number of agents and their interactions. A crew of five agents might make fifteen language model calls to complete a single task. At production scale with hundreds of daily executions, these costs add up quickly. You'll need careful optimization to keep economics favorable. Consider caching intermediate results, using smaller models for simple agents, and batching similar requests.

The framework shines when you're building complex workflows that genuinely benefit from specialization. A market research system that needs to gather data, analyze trends, generate insights, and create presentations naturally maps to specialized agents. Each agent focuses on what it does best, and the coordination overhead pays for itself through improved quality.

CrewAI works particularly well for content generation pipelines, research workflows, and multi-step analysis tasks. These scenarios tolerate longer execution times in exchange for higher quality results. The framework becomes less suitable for interactive applications, simple queries, or anything requiring sub-second response times.

Integration with existing systems requires thoughtful planning. CrewAI agents need access to your data sources, APIs, and tools. Setting up these connections for multiple agents takes more work than configuring a single agent. However, once established, the modular structure makes it easy to add new capabilities by introducing new specialist agents rather than modifying existing ones.

The community around CrewAI has grown rapidly, contributing agent templates, workflow patterns, and integration examples. This ecosystem accelerates development when you can find reference implementations similar to your use case. The framework's opinionated structure also means less variation in how people build systems, making it easier to understand and adapt community contributions.

For developers building production AI agent systems, CrewAI represents the middle ground between AutoGPT's full autonomy and LangChain's granular control. You get structured collaboration without writing extensive orchestration logic. The trade-off is accepting the framework's opinions about how multi-agent systems should work. When those opinions align with your requirements, CrewAI significantly accelerates development.

Comparing Performance and Reliability Across Frameworks

Performance characteristics vary dramatically between frameworks, and these differences impact both user experience and operational costs. Understanding real-world performance helps you make informed decisions based on your specific requirements rather than theoretical capabilities.

Latency measures the time from request to response. LangChain typically adds 100-300ms of overhead compared to calling language model APIs directly. This overhead comes from abstraction layers, component initialization, and internal routing. For most applications, this latency is acceptable. However, real-time chat interfaces or high-frequency trading systems need every millisecond.

AutoGPT's latency profile differs because agents make multiple sequential calls. A single user request might trigger ten language model interactions as the agent reasons through the task. Total latency can reach tens of seconds or even minutes. This makes AutoGPT inappropriate for interactive applications but acceptable for background processing.

CrewAI latency depends on crew structure and task complexity. Sequential crews multiply individual agent latencies. A crew with five agents running sequentially might take twenty seconds to complete a workflow where each agent averages four seconds. Parallel execution helps when tasks are independent, but coordination overhead remains.

Reliability encompasses how consistently frameworks produce correct results and handle errors gracefully. LangChain's reliability depends heavily on how you implement error handling. The framework provides tools for retries, fallbacks, and graceful degradation, but you must configure them properly. Default configurations often lack production-ready error handling.

AutoGPT's autonomous nature makes reliability challenging. Agents might pursue unexpected approaches to goals, leading to unpredictable results. One execution might succeed perfectly while another fails spectacularly despite identical inputs. This variability makes thorough testing essential. You can't assume consistent behavior across executions.

CrewAI reliability improves through specialization. When agents have narrow, well-defined responsibilities, their behavior becomes more predictable. However, the framework introduces new failure modes through inter-agent communication. A failure in one agent might cascade through the entire crew if not handled properly. Implementing robust error handling at each stage becomes critical.

Resource consumption matters for operational costs and scalability. LangChain itself is relatively lightweight, but your total resource usage depends on what components you include. Vector stores, embedding models, and language models consume the bulk of resources. The framework adds minimal overhead beyond what these components naturally require.

AutoGPT can be resource-intensive because autonomous agents make many API calls. A single task might consume ten to twenty times more tokens than a direct implementation. This multiplier effect impacts both costs and rate limits. Budget accordingly and implement usage caps to prevent unexpected expenses.

CrewAI resource consumption scales with crew size and complexity. Each agent potentially makes separate API calls, accesses vector stores, and performs computations. A crew of five agents might use five times the resources of a single agent, though optimization through caching and result reuse can reduce this multiplier.

Error recovery capabilities determine how frameworks handle failures. LangChain provides retry logic, alternative model fallbacks, and error callbacks. You configure these mechanisms based on your reliability requirements. The framework gives you the tools but doesn't enforce particular patterns.

AutoGPT agents can sometimes recover from errors autonomously by trying alternative approaches. This self-correction capability is powerful but unpredictable. Sometimes agents work around problems cleverly. Other times they enter loops or abandon goals. You need monitoring and intervention mechanisms rather than relying on autonomous recovery.

CrewAI handles errors at both individual agent and crew levels. An agent failure might trigger a retry, fallback to a different agent, or halt the entire crew depending on configuration. The framework provides reasonable defaults, but production systems require custom error handling that considers business impact.

Choosing the Right Framework for Your Project

Selecting an AI agent framework requires matching your specific requirements against each framework's strengths and limitations. No single framework excels at everything, so the best choice depends entirely on your use case, constraints, and priorities.

Start by clarifying your primary requirements. Are you building an interactive chat system that needs sub-second responses? AutoGPT is immediately disqualified. Do you need extensive integrations with various data sources and services? LangChain's ecosystem becomes compelling. Is your workflow naturally modeled as specialized agents collaborating? CrewAI makes sense.

Consider your team's technical sophistication. LangChain rewards deep expertise because its flexibility enables powerful customization. However, this same flexibility overwhelms beginners who face too many choices. CrewAI's opinionated structure helps newer teams by constraining decisions to proven patterns. AutoGPT requires the least framework knowledge but demands the most AI expertise to set effective goals and constraints.

Budget constraints significantly influence framework selection. If you're building a side project or proof-of-concept with limited resources, AutoGPT's high token consumption might be prohibitive. LangChain allows fine-tuning costs through model selection and caching strategies. CrewAI's multi-agent nature naturally costs more but delivers proportional value when specialization improves quality.

Time-to-market considerations matter for commercial projects. LangChain's learning curve delays initial progress but pays off with flexibility later. CrewAI's structure accelerates development once you understand the patterns. AutoGPT might deliver impressive demos quickly but require extensive refinement for production use.

Maintenance burden increases with framework complexity. LangChain systems often need ongoing updates as the framework evolves. AutoGPT agents require monitoring and adjustment as their behavior drifts. CrewAI crews need maintenance across multiple agents. Simpler approaches reduce long-term maintenance even if initial development takes longer.

Real-world project patterns suggest guidelines. Customer support chatbots performing simple tasks work well with lightweight LangChain implementations. Complex research workflows benefit from CrewAI's multi-agent structure. Internal tools exploring possibilities might leverage AutoGPT's autonomy despite its unpredictability.

The decision often comes down to control versus convenience. LangChain gives you maximum control at the cost of complexity. AutoGPT provides autonomous behavior at the cost of predictability. CrewAI offers structured collaboration at the cost of coordination overhead. Choose based on which trade-off aligns with your priorities.

Building Your First Agent: Practical Implementation Guide

Theory matters less than practice when you're actually building production systems. Let me walk you through implementing a realistic AI agent using each framework so you can see concrete differences in development experience and code complexity.

Our example agent handles customer support for an e-commerce business. The agent needs to answer product questions by searching a knowledge base, check order status by querying a database, and escalate complex issues to humans. This workflow represents common real-world requirements without being trivially simple.

Starting with LangChain, you'll spend significant time configuring components. First, set up the language model wrapper, choosing between various providers. Then configure the vector store for knowledge base search, selecting an embedding model and similarity algorithm. Next, create tools for order lookup and escalation. Finally, wire everything together with an agent executor that orchestrates the workflow.

The LangChain implementation gives you fine-grained control over each component. You can optimize vector search parameters, customize prompt templates for different scenarios, and implement sophisticated error handling. However, you'll write 200-300 lines of code before having a working agent. This investment pays off when requirements evolve because modifying specific components is straightforward.

With CrewAI, you'd structure this as multiple specialized agents. A research agent handles knowledge base searches. A data agent performs order lookups. A decision agent determines whether to escalate. The manager agent coordinates their collaboration. This separation makes each agent simpler but introduces coordination complexity.

The CrewAI version requires less code per agent but more overall structure. You define each agent's role, capabilities, and tools. Then you specify the crew structure and task flow. The framework handles orchestration automatically. The total codebase might be similar in size to LangChain but organized differently. Understanding one agent's behavior is simpler, but understanding the entire system requires grasping multiple agents and their interactions.

AutoGPT takes a fundamentally different approach. You'd give the agent a goal like "help the customer with their inquiry by searching relevant information and checking order status if needed." The agent then autonomously decides how to accomplish this goal. You provide tools for knowledge search and order lookup, but the agent determines when and how to use them.

The AutoGPT implementation requires the least framework-specific code but the most prompt engineering. Getting the agent to reliably follow appropriate workflows takes extensive testing and goal refinement. You'll spend time constraining the agent's autonomy to prevent expensive tangents while preserving useful flexibility.

Integration Patterns and Production Considerations

Moving from development to production requires addressing concerns that demos often ignore. Integration with existing systems, monitoring, error handling, and cost management become critical when real users and real money are involved.

API integration patterns differ across frameworks. LangChain provides explicit tool classes that wrap external APIs. You implement a run method that takes parameters and returns results. The framework handles serialization, retries, and error propagation. This explicit approach works well when you need precise control over API behavior.

CrewAI tools follow similar patterns but you assign them to specific agents based on role appropriateness. Your data agent gets order lookup tools while your research agent gets search tools. This separation prevents agents from accessing capabilities outside their domain, which can improve reliability and security.

AutoGPT plugins provide API access through a different mechanism. Plugins register functions the agent can discover and call. The autonomous agent decides when plugins are relevant rather than following explicit workflows. This flexibility enables creative problem-solving but makes behavior less predictable.

Database integration requires careful consideration of security and performance. LangChain's SQL database chains provide safe query generation that prevents injection attacks. However, performance optimization often requires custom implementations that bypass framework abstractions. Vector databases integrate naturally through LangChain's retriever abstraction.

CrewAI database access typically happens through designated data agents. Centralizing database logic in specific agents makes security auditing easier and enables caching strategies that benefit the entire crew. However, agents might request redundant data if coordination isn't carefully managed.

Monitoring production AI agents differs from traditional application monitoring. You need to track token usage, response latency, error rates, and quality metrics like user satisfaction or task completion rates. LangChain supports various callback handlers that emit metrics throughout execution. Implementing comprehensive monitoring requires custom callback classes.

Cost tracking becomes essential when language model APIs charge per token. Logging input and output tokens for every call enables cost analysis and optimization. You might discover certain prompts wastefully consume tokens or specific agents dominate expenses. This visibility drives optimization decisions.

Version control and deployment strategies must account for prompt engineering. Your prompts are effectively code that evolves through testing and refinement. Storing prompts in version control, implementing staged rollouts, and maintaining prompt testing suites becomes standard practice. Changes to prompts can impact behavior as significantly as code changes.

Testing AI agents presents unique challenges because responses vary between runs. Traditional unit tests that expect specific outputs fail with language models. Instead, implement evaluation frameworks that score responses on relevant criteria. Does the response address the user's question? Is the tone appropriate? Are facts accurate? These qualitative assessments replace deterministic assertions.

Advanced Techniques and Optimization Strategies

Once your basic agent works, optimization separates adequate systems from exceptional ones. These advanced techniques improve performance, reduce costs, and enhance reliability across all frameworks.

Prompt caching dramatically reduces costs and latency for repeated queries. Many frameworks support caching language model responses based on input prompts. When a user asks about shipping policies for the third time today, serve the cached response instead of making another API call. Implement cache invalidation strategies that refresh stale information appropriately.

Response streaming improves perceived performance for long-running tasks. Instead of waiting thirty seconds for a complete response, stream partial results as they're generated. Users see progress immediately, making the system feel faster even if total execution time remains unchanged. LangChain and most modern frameworks support streaming through callback handlers.

Model cascading optimizes costs by using expensive models only when necessary. Start with fast, cheap models like GPT-3.5 Turbo for simple queries. If the response lacks confidence or the query seems complex, escalate to GPT-4 or Claude. This tiered approach balances cost with quality. Implement confidence scoring to make escalation decisions reliably.

Parallel execution reduces latency when multiple independent operations are needed. If your agent needs to search a knowledge base and query an API, run these operations simultaneously rather than sequentially. LangChain supports parallel chains through specific composition patterns. CrewAI enables parallel agent execution when tasks are independent.

Semantic caching takes traditional caching further by recognizing semantically similar queries. Questions like "how do I return an item?" and "what's your return policy?" should return cached results even though the text differs. Implement embedding-based similarity search to detect equivalent queries and serve cached responses.

Fine-tuning models for specific tasks can improve both quality and cost-effectiveness. If your agent handles technical support for a specific product, fine-tuning on historical support conversations creates a specialized model that outperforms general-purpose models. The upfront investment in fine-tuning pays off with better results and potentially lower per-query costs.

Monitoring and observability enable continuous improvement. Track which queries fail, where agents get stuck, and what operations consume the most resources. This data drives optimization priorities. You might discover that 80% of costs come from 20% of query types, suggesting where optimization effort provides maximum return.

Common Mistakes and How to Avoid Them

Learning from others' mistakes accelerates your progress and prevents expensive errors. These pitfalls catch most developers at some point, but awareness helps you avoid or quickly recover from them.

Over-engineering solutions is perhaps the most common mistake. Developers see LangChain's capabilities and build elaborate multi-chain systems for problems that need simple prompts. Start with the simplest approach that might work. Add complexity only when simpler solutions prove insufficient. Many successful production agents use straightforward implementations rather than framework exotica.

Inadequate testing leads to embarrassing failures once real users interact with your agent. Language models behave unpredictably, so thorough testing across diverse inputs becomes essential. Create test suites covering happy paths, edge cases, adversarial inputs, and failure scenarios. Automated testing catches regressions as you modify prompts or upgrade framework versions.

Ignoring cost implications creates budget surprises. Small inefficiencies compound at scale. An extra 200 tokens per query seems insignificant until you're processing 10,000 daily queries and spending an additional $500 monthly. Profile token usage early and optimize expensive operations. Implement budgets and alerts before costs spiral out of control.

Poor error handling creates terrible user experiences. When your agent encounters an error, users should receive helpful messages explaining what happened and what they can do. Silent failures or technical error dumps frustrate users and erode trust. Implement graceful degradation where agents remain partially functional even when some capabilities fail.

Neglecting security considerations exposes vulnerabilities. AI agents often access sensitive data and external systems. Implement proper authentication, authorization, and input validation. Consider what happens if malicious users craft inputs designed to manipulate agent behavior or extract unauthorized information. Treat your AI agent with the same security rigor as any production system.

The Future of AI Agent Frameworks

Understanding where this technology is heading helps you make better decisions today. Several trends are reshaping AI agent development and will influence which frameworks succeed long-term.

Model improvements continue at a rapid pace, making agents more capable with each generation. GPT-4 significantly outperforms GPT-3.5 at reasoning and tool use. Future models will be faster, cheaper, and more reliable. Frameworks that abstract model selection will age better than those tightly coupled to specific models. Your agent architecture should accommodate model upgrades without major refactoring.

Standardization efforts aim to reduce framework fragmentation. Multiple projects are working toward common interfaces for agents, tools, and workflows. Success in standardization would make frameworks more interoperable and reduce vendor lock-in. However, standardization also potentially reduces innovation as frameworks converge on common patterns.

Specialized agent frameworks are emerging for specific domains. Healthcare agents, legal research agents, and financial analysis agents benefit from domain-specific optimizations and compliance features. These vertical frameworks might outperform general-purpose tools for their target domains. Watch for specialization in your industry that could accelerate development.

Local model deployment is becoming practical as open-source models improve and hardware becomes more powerful. Running models on your infrastructure eliminates API costs, reduces latency, and improves privacy. Frameworks that support both API and local deployment provide flexibility as this landscape evolves. Understanding which developers will succeed as AI evolves helps you position yourself strategically.

Making Your Final Decision

After exploring frameworks in depth, you're equipped to make an informed decision. The right framework aligns with your specific requirements, team capabilities, and project constraints rather than being objectively "best."

Choose LangChain when you need maximum flexibility, have complex integration requirements, or expect requirements to evolve significantly. The framework's learning curve and complexity cost pays off through adaptability. Large teams with dedicated AI engineers extract the most value from LangChain's capabilities.

Select CrewAI when your workflow naturally maps to specialized agents collaborating. Content generation, research pipelines, and multi-step analysis tasks benefit from CrewAI's structure. Teams comfortable with opinionated frameworks will appreciate the guidance CrewAI provides through its collaboration patterns.

Consider AutoGPT for research tools, exploration tasks, or internal applications where autonomy provides value despite unpredictability. The framework works poorly for customer-facing applications requiring consistency but excels at thorough investigation and creative problem-solving.

Remember that you can use multiple frameworks in different parts of your system. A customer support platform might use LangChain for interactive chat while leveraging CrewAI for automated report generation and AutoGPT for market research. Choosing the right tool for each specific job often outperforms forcing a single framework everywhere.

Your first framework choice matters less than getting started. Practical experience with any framework teaches lessons applicable to all of them. Build something real, deploy it to actual users, and iterate based on feedback. The best framework is the one that helps you ship valuable products to users who care about results rather than implementation details.

The AI agent development landscape will continue evolving rapidly. New frameworks will emerge. Existing tools will add features. Models will improve. Your ability to evaluate tools, adapt to changes, and choose appropriately for each situation matters more than perfect initial decisions. Stay curious, keep learning, and focus on delivering value rather than using the coolest technology.